
How to Scale a Ledger, Part VI: Concurrency Controls, Performance, and More

In the final part of our series, we dig deeper into the final two ledger guarantees—concurrency controls and performance—and touch on a few advanced topics.

This post is the sixth and final chapter of a broader technical paper, How to Scale a Ledger. Here’s what we’ve covered so far:

- Part I: Why you should use a ledger database

- Part II: How to map financial events to double-entry primitives

- Part III: How a transaction model enables atomic money movement

- Part IV: How ledgers support recording and authorizing

- Part V: More about ledger guarantees: immutability and double-entry

In this chapter, we’ll dig deeper into the final two ledger guarantees—concurrency controls and performance—and touch on a few advanced topics.

Concurrency Controls: Preventing Double Spends

Guarantee: It’s not possible to unintentionally spend money, or spend it more than once.

As usage of your app grows, race conditions become a real threat; concurrency controls in the Ledgers API prevent the inconsistencies they would otherwise cause.

We’ve already covered how our Entry data model, with version and balance locks, prevents the unintentional spending of money (see Part IV). But nothing we’ve designed so far prevents accidentally double-spending money.

Here’s a common scenario:

1. A client, GoodPay, sends a request to write a Transaction.

2. The ledger is backed up and is taking a long time to respond. GoodPay times out.

3. GoodPay retries the request to write a Transaction.

4. Concurrently with Step 3, the ledger finishes creating the Transaction from Step 1, even though GoodPay is no longer waiting for the response.

5. The ledger also creates the Transaction from Step 3. The end state is that the ledger moved money twice when GoodPay only wanted to do it once.

Idempotency Keys

How can the ledger detect that GoodPay is retrying rather than actually trying to move money more than once? The solution is deduplicating requests using idempotency keys.

An idempotency key is a string sent by the client. There aren’t any requirements for this string, but it is the client’s responsibility to send the same string when it’s retrying a previous request.

Here’s some simplified client code that’s resilient to retries:
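A minimal Python sketch of such a client. The send callable and the LedgerTimeout exception are hypothetical stand-ins for your ledger client's request function and its timeout error.

```python
import uuid
from typing import Any, Callable


class LedgerTimeout(Exception):
    """Hypothetical error raised when the ledger doesn't answer in time."""


def create_transaction(
    send: Callable[..., Any], payload: dict, max_attempts: int = 3
) -> Any:
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    # Generate the key once, outside the retry loop, so every retry of
    # this logical request carries the same key.
    idempotency_key = str(uuid.uuid4())
    for attempt in range(1, max_attempts + 1):
        try:
            return send(payload, idempotency_key=idempotency_key)
        except LedgerTimeout:
            if attempt == max_attempts:
                raise  # out of attempts; let the caller decide what to do
```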

Notice that the idempotency key is generated outside of the retry loop, ensuring that the same string is sent when the client is retrying. On the ledger side:

- The keys must be stored for 24 hours to handle transient timeout errors: when the ledger sees an idempotency key that’s already stored, it returns the response from the previous request instead of processing the request again.

- It’s the client’s responsibility to ensure that no request that moves money is retried past a 24-hour period.
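The ledger-side deduplication might be sketched like this, with an in-memory dict standing in for durable storage and an injectable clock for testing. This sketch is not safe for two concurrent requests carrying the same key; a real implementation would need something like a unique constraint on the key so that only one request can claim it.

```python
import time
from typing import Callable, Dict, Tuple

TTL_SECONDS = 24 * 60 * 60  # keys are retained for 24 hours


class IdempotencyStore:
    """In-memory sketch; a real ledger would persist keys durably."""

    def __init__(self, clock: Callable[[], float] = time.time):
        self._clock = clock
        self._responses: Dict[str, Tuple[float, dict]] = {}

    def handle(self, key: str, create: Callable[[], dict]) -> dict:
        now = self._clock()
        cached = self._responses.get(key)
        if cached is not None and now - cached[0] < TTL_SECONDS:
            # A retry of a request we've already processed: replay the
            # stored response instead of moving money again.
            return cached[1]
        response = create()
        self._responses[key] = (now, response)
        return response
```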

Efficient Aggregation: Performance of Balance Reads

Guarantee: Retrieving the current and historical balances of an Account is fast.

Our ledger is now immutable, balanced, and handles concurrent writes and client retries. But is it fast?

You may have already noticed in our discussion of Entries earlier in this series that calculating Account balances is an O(n) operation, because we need to sum across n Entries.

That’s fine with hundreds or thousands of Entries, but it’s too slow once we have tens or hundreds of thousands of Entries. It’s also too slow if we’re asking the ledger to compute the balance of many Accounts at the same time.

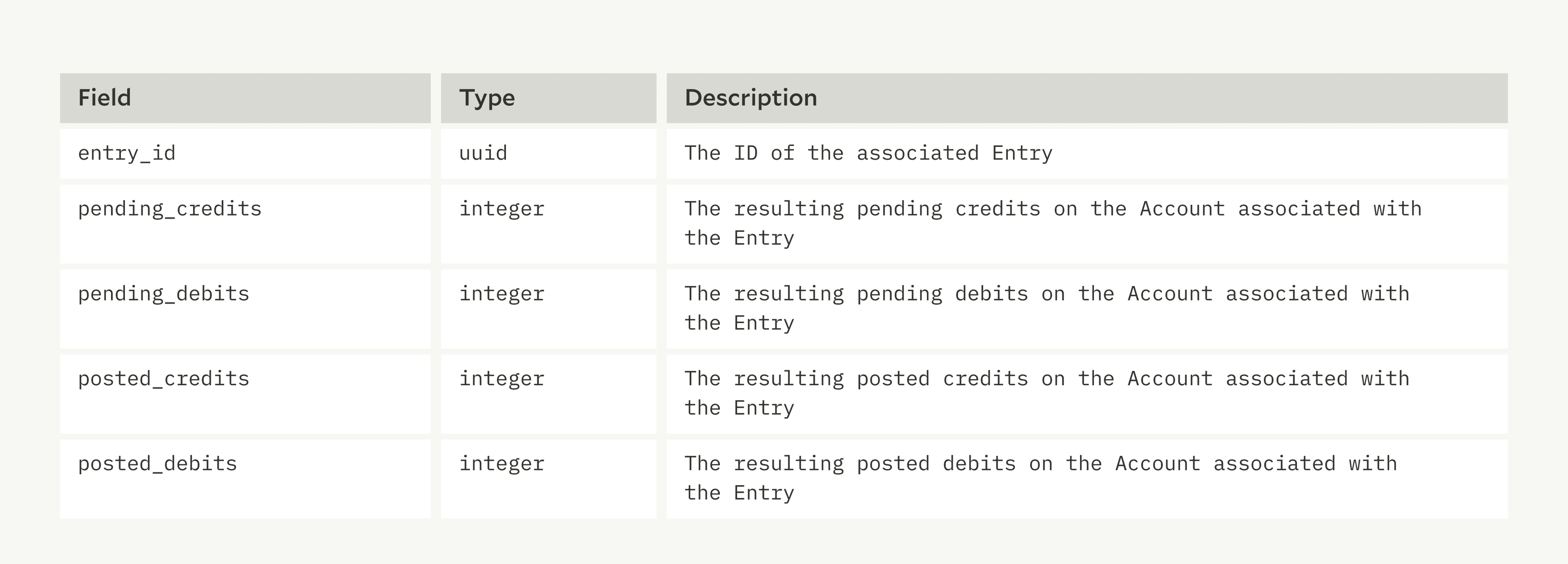

We solve this problem by caching Account balances, so that fetching the balance is a constant time operation. We can compute all balances from pending_debits, pending_credits, posted_debits, and posted_credits—so those are the numbers we need to cache.
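As an illustration of why these four numbers suffice, here is a Python sketch that derives posted, pending, and available balances from them. It assumes, consistent with the cache-update rules that follow, that pending_debits and pending_credits include posted amounts as well as pending ones. The "available" definition (posted inflows minus all pending and posted outflows) is a common convention assumed here, not a specification.

```python
from dataclasses import dataclass


@dataclass
class BalanceCounters:
    # Amounts in minor units (cents). Assumption: pending_* also counts
    # posted Entries, so pending_* >= posted_* always holds.
    pending_debits: int = 0
    pending_credits: int = 0
    posted_debits: int = 0
    posted_credits: int = 0


def derived_balances(c: BalanceCounters, credit_normal: bool) -> dict:
    """Derive the balances a client might request from the four counters."""
    # Credit-normal Accounts grow with credits; debit-normal ones with debits.
    sign = 1 if credit_normal else -1
    posted = sign * (c.posted_credits - c.posted_debits)
    pending = sign * (c.pending_credits - c.pending_debits)
    # "Available": count only posted inflows, but reserve pending outflows.
    if credit_normal:
        available = c.posted_credits - c.pending_debits
    else:
        available = c.posted_debits - c.pending_credits
    return {"posted": posted, "pending": pending, "available": available}
```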

We propose two caching strategies, one for the current Account balance and one for balances at a timestamp.

Current Balances

The current Account balance cache is for the balance that reflects all Entries that have been written to the ledger, regardless of their effective_at timestamps. This cache is used for:

- Displaying the balance on an Account when an effective_at timestamp is not supplied by the client.

- Enforcing balance locks (a correct implementation of balance locking relies on a single database row containing the most up-to-date balance).

Because this cache is used for enforcing balance locks, it needs to be updated synchronously when authorizing Entries are written to an Account. This synchronous update comes at the expense of response time when creating Transactions.

So that updates to the balance aren’t susceptible to stale reads, we trust the database to do the math atomically. Let’s use this example Entry to show how to update the cache:

The entry contains a posted debit of $100, so we atomically increment the pending_debits and posted_debits fields in the cache when it’s written.
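A minimal sketch of that atomic increment, using Python's built-in sqlite3 module and a hypothetical account_balances table with amounts in cents:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE account_balances (
           account_id      TEXT PRIMARY KEY,
           pending_debits  INTEGER NOT NULL DEFAULT 0,
           pending_credits INTEGER NOT NULL DEFAULT 0,
           posted_debits   INTEGER NOT NULL DEFAULT 0,
           posted_credits  INTEGER NOT NULL DEFAULT 0)"""
)
conn.execute("INSERT INTO account_balances (account_id) VALUES ('acct_1')")


def apply_posted_debit(conn: sqlite3.Connection, account_id: str, amount: int) -> None:
    # The database computes the new values from the row's current contents,
    # so there is no read-modify-write in application code to go stale.
    with conn:  # commits on success, rolls back on error
        conn.execute(
            """UPDATE account_balances
                  SET pending_debits = pending_debits + ?,
                      posted_debits  = posted_debits + ?
                WHERE account_id = ?""",
            (amount, amount, account_id),
        )


apply_posted_debit(conn, "acct_1", 10_000)  # $100.00 in cents
print(conn.execute("SELECT pending_debits, posted_debits FROM account_balances").fetchone())
# prints (10000, 10000)
```

The key design choice is that the arithmetic lives inside the UPDATE. Reading the row, adding in application code, and writing it back would let two concurrent writers read the same old value, and one of the increments would be lost.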

We also need to update the cache when a Transaction changes state from pending to posted or archived.

- If we archived a pending debit of $100, we’d decrease pending_debits by $100 in the cache.

- Similarly, if the pending debit was posted, we’d increase posted_debits by $100.
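These transition rules can be sketched as a small Python function over the four counters. The "debit"/"credit" and "posted"/"archived" string values are hypothetical; in a database, each branch would be a single atomic UPDATE on the Account's balance row.

```python
from dataclasses import dataclass


@dataclass
class BalanceCounters:
    # pending_* counts pending *and* posted Entries; posted_* counts posted only.
    pending_debits: int = 0
    pending_credits: int = 0
    posted_debits: int = 0
    posted_credits: int = 0


def apply_transition(c: BalanceCounters, direction: str, amount: int, new_status: str) -> None:
    """Update the counters when a pending Entry becomes posted or archived."""
    if direction not in ("debit", "credit"):
        raise ValueError(f"unknown direction: {direction}")
    if new_status == "archived":
        # The amount stops counting toward the pending totals.
        field, delta = f"pending_{direction}s", -amount
    elif new_status == "posted":
        # Already included in pending_*; now it also counts as posted.
        field, delta = f"posted_{direction}s", amount
    else:
        raise ValueError(f"unsupported transition to: {new_status}")
    setattr(c, field, getattr(c, field) + delta)
```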

Effective Time Balances

We also need to support fast reads for Account balances that specify an effective_at timestamp. This caching is more complex, so many ledgers avoid it by not supporting effective_at timestamps at all, at the expense of correctness for recorded Transactions.

We’ve found a few methods that work, and we’ll cover two approaches here:

1. Anchoring: Assuming that a constant number of Entries are written to an Account within each calendar date, we can get a constant time read of historical balances by:

- First, caching the balance at the end of each date

- Then, applying the intra-day Entries when we read.

Our cache saves anchor points for balance reads. For every date that has Entries, the cache can report the end-of-day balance in constant time. The effective date cache should store the same numbers needed to compute all balances: pending_debits, pending_credits, posted_debits, and posted_credits.

Assuming we’ve cached the balance for 2025-07-31, we can get the balance for 2025-08-01T22:22:41Z with this algorithm:

- Step 1: Get the cache entry with the latest effective_date that’s less than or equal to 2025-07-31. Store latest_effective_date as the effective date of the cache entry found.

- Step 2: Sum all Entries by direction and status whose effective_at falls after the end of latest_effective_date and at or before '2025-08-01T22:22:41Z'. (Entries dated latest_effective_date are already included in its cached end-of-day balance.)

- Step 3: Report the Account balance as the sum of step 1 and step 2.
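The three steps can be sketched in Python as follows. The Entry shape, the tuple layout for the four counters, and the in-memory anchors dict are all hypothetical, and timestamps are naive datetimes assumed to be UTC. A real ledger would fetch only the intraday Entries with an indexed query rather than scanning a list.

```python
import bisect
from dataclasses import dataclass
from datetime import date, datetime
from typing import Dict, List, Tuple

# (pending_debits, pending_credits, posted_debits, posted_credits)
Counters = Tuple[int, int, int, int]


@dataclass
class Entry:
    effective_at: datetime  # assumed UTC
    direction: str          # "debit" or "credit"
    status: str             # "pending", "posted", or "archived"
    amount: int


def entry_counters(e: Entry) -> Counters:
    # Posted Entries count toward pending_* and posted_*; archived toward neither.
    if e.status == "archived":
        return (0, 0, 0, 0)
    debit = e.amount if e.direction == "debit" else 0
    credit = e.amount if e.direction == "credit" else 0
    if e.status == "posted":
        return (debit, credit, debit, credit)
    return (debit, credit, 0, 0)


def add(a: Counters, b: Counters) -> Counters:
    return tuple(x + y for x, y in zip(a, b))


def balance_at(anchors: Dict[date, Counters], entries: List[Entry], t: datetime) -> Counters:
    # Step 1: latest end-of-day anchor strictly before t's date.
    days = sorted(anchors)
    i = bisect.bisect_left(days, t.date())
    latest = days[i - 1] if i > 0 else None
    total = anchors[latest] if latest is not None else (0, 0, 0, 0)
    # Step 2: add Entries dated after that anchor, up to and including t.
    for e in entries:
        after_anchor = latest is None or e.effective_at.date() > latest
        if after_anchor and e.effective_at <= t:
            total = add(total, entry_counters(e))
    # Step 3: the sum is the balance as of t.
    return total
```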

Keeping the cache up-to-date is the bigger challenge. While we can update the current Account balance cache with a single-row upsert, for the effective date cache we potentially need to update many rows: one for each date between the effective_at of the Transaction to be written and the latest effective date across all Entries written to the ledger.

Since it’s not possible to update the cache synchronously as we write Transactions, we update it asynchronously. Each Entry is placed in a queue after the Transaction is initially written, and we update the cache to reflect each Entry in batches, grouped by Account and effective date.
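A minimal sketch of the batched, asynchronous update. It assumes queued items arrive as hypothetical (account_id, effective_at, counter deltas) tuples and that anchors live in a nested in-memory dict keyed by Account and date. It illustrates the write amplification described above: one backdated Entry adds its delta to every anchor on or after its date.

```python
from collections import defaultdict
from datetime import date, datetime
from typing import Dict, List, Tuple

# (pending_debits, pending_credits, posted_debits, posted_credits)
Counters = Tuple[int, int, int, int]
QueuedEntry = Tuple[str, datetime, Counters]  # account_id, effective_at, deltas


def add(a: Counters, b: Counters) -> Counters:
    return tuple(x + y for x, y in zip(a, b))


def apply_batch(anchors: Dict[str, Dict[date, Counters]], batch: List[QueuedEntry]) -> None:
    """Fold a batch of queued Entries into per-Account end-of-day anchors."""
    # Group deltas by (Account, effective date) so each group is applied once.
    grouped: Dict[Tuple[str, date], Counters] = defaultdict(lambda: (0, 0, 0, 0))
    for account_id, effective_at, delta in batch:
        key = (account_id, effective_at.date())
        grouped[key] = add(grouped[key], delta)

    for (account_id, day), delta in grouped.items():
        days = anchors.setdefault(account_id, {})
        if day not in days:
            # Seed a new anchor from the closest earlier one (or zero).
            earlier = [d for d in days if d < day]
            days[day] = days[max(earlier)] if earlier else (0, 0, 0, 0)
        # A backdated Entry changes every end-of-day balance from its date on.
        for d in list(days):
            if d >= day:
                days[d] = add(days[d], delta)
```

Because each group adds its delta to every later anchor, and a new anchor is seeded from its closest predecessor, the result doesn't depend on the order in which groups are processed.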

What happens if a client requests the balance of an Account at an effective time, but recent Entries have not yet been reflected in the cache?

We can return an up-to-date balance by applying Entries still in the queue to balances at read time. Ultimately, an effective_at balance is:
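One way to write this out, under two assumptions of ours: the anchor A(d*) reflects only Entries already drained from the queue, and the intraday sum reads from the Entries table, which already holds queued Entries.

```latex
B(t) = A(d^{*})
     + \sum_{\substack{e \in E \\ \mathrm{day}(e) > d^{*},\ \mathrm{eff}(e) \le t}} c(e)
     + \sum_{\substack{e \in Q \\ \mathrm{day}(e) \le d^{*}}} c(e)
```

Here d* is the latest cached date before the day of t, E is every written Entry, Q (a subset of E) is the Entries still in the queue, eff(e) is an Entry's effective_at, day(e) is its date, and c(e) is its contribution to the four counters. The last term only covers queued Entries dated on or before d*, because later ones are already picked up by the intraday sum.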

2. Resulting Balances: When individual Accounts have many intraday Entries, the anchoring approach won’t scale. We can increase the granularity of the cache to hours, minutes, or seconds, but we truly get constant time access to Account balances by storing a resulting balance cache.

Each entry in the cache stores the resulting balance on the associated Account after the Entry was applied.

The cache is simpler now, but updating it is more expensive.

When an Entry is written with an earlier effective_at timestamp than the max effective_at for the Account, all cache entries for Entries after the new effective_at timestamp need to be updated.
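To make the trade-off concrete, here is a minimal Python sketch of a per-Account resulting balance cache. For brevity it keeps a single signed balance instead of the four counters, and it breaks ties between Entries with the same effective_at using a hypothetical sequence number. A read is one search for the last row at or before the timestamp (a binary search here, an index lookup in a database), while a backdated insert must rewrite every later row.

```python
import bisect
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Tuple


@dataclass
class ResultingBalanceCache:
    """One row per Entry, holding the Account balance *after* that Entry."""
    keys: List[Tuple[datetime, int]] = field(default_factory=list)  # sorted (effective_at, seq)
    balances: List[int] = field(default_factory=list)               # resulting balance per key

    def balance_at(self, t: datetime) -> int:
        # The last row effective at or before t holds the answer.
        # float("inf") sorts after every seq with the same timestamp.
        i = bisect.bisect_right(self.keys, (t, float("inf")))
        return self.balances[i - 1] if i > 0 else 0

    def insert(self, effective_at: datetime, seq: int, amount: int) -> int:
        """Add an Entry; return how many existing rows had to be rewritten."""
        key = (effective_at, seq)
        i = bisect.bisect_left(self.keys, key)
        previous = self.balances[i - 1] if i > 0 else 0
        self.keys.insert(i, key)
        self.balances.insert(i, previous + amount)
        # Every later row's resulting balance shifts by `amount`.
        for j in range(i + 1, len(self.balances)):
            self.balances[j] += amount
        return len(self.balances) - i - 1
```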

A detailed discussion of how the cache is updated is beyond the scope of this paper. It’s not possible to update every cache entry atomically in the database, so each cache entry needs to keep track of how up to date it is.

Monitoring Cache Drift

Reading Account balances from a cache improves performance, but it means that balance reads may diverge from source-of-truth Entries; it’s also possible for bugs to cause this divergence.

We handle this by:

- Regularly verifying that each Account’s cached balances match the sum of its Entries.

- Automatically turning off cache reads for Accounts that have drifted.

- Providing tools for a backfill process and a runbook for on-call engineers to triage and address cache drift problems 24/7.
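The verification and shut-off steps might look like the following sketch. It assumes per-Entry counter deltas can be streamed from the source of truth and that a hypothetical cache_disabled set controls whether reads use the cache. In production the comparison should run against a consistent snapshot; otherwise Entries written mid-check would look like drift.

```python
from typing import Dict, Iterable, List, Set, Tuple

# (pending_debits, pending_credits, posted_debits, posted_credits)
Counters = Tuple[int, int, int, int]
ZERO: Counters = (0, 0, 0, 0)


def recompute(entries: Iterable[Tuple[str, Counters]]) -> Dict[str, Counters]:
    """Sum each Entry's counter contribution per Account (the source of truth)."""
    totals: Dict[str, Counters] = {}
    for account_id, delta in entries:
        prev = totals.get(account_id, ZERO)
        totals[account_id] = tuple(a + b for a, b in zip(prev, delta))
    return totals


def check_drift(
    cache: Dict[str, Counters],
    entries: Iterable[Tuple[str, Counters]],
    cache_disabled: Set[str],
) -> List[str]:
    """Return drifted Account IDs and stop serving cached reads for them."""
    truth = recompute(entries)
    drifted = sorted(
        account_id
        for account_id in set(cache) | set(truth)
        if cache.get(account_id, ZERO) != truth.get(account_id, ZERO)
    )
    # Reads for these Accounts fall back to summing Entries until a backfill
    # repairs the cache and an engineer re-enables it.
    cache_disabled.update(drifted)
    return drifted
```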

Advanced Topics: Scaling Beyond the Basics

Even with a ledger that is immutable, double-entry balanced, concurrency-safe, and fast to aggregate balances, your architecture may start to show limits under real-world pressures of product complexity and transaction volume.

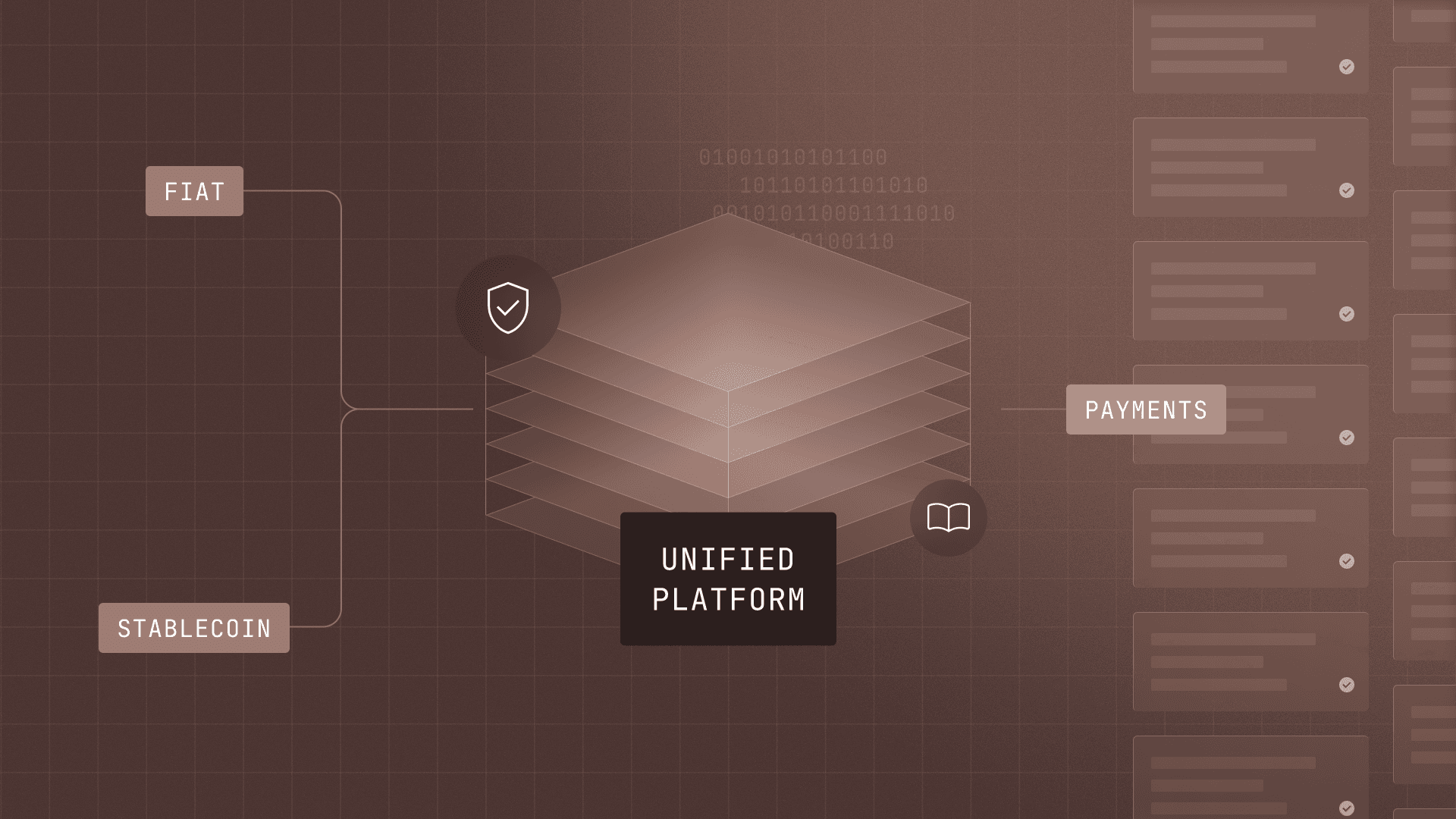

Here are four major areas we’ve invested in for our Ledgers API, all of which a truly global, scalable ledger will need:

1. Account Aggregation for Reporting and UX

For many products and reporting needs, aggregating at the Account level is not sufficient. For example:

- Reporting: Showing business-level metrics would mean combining Transactions and balances for all revenue Accounts.

- Product Experience: Supporting sub-accounts, or Account groupings that, from your user’s perspective, share a balance and Transactions.

Solution: Account Categories (Graph-Based Aggregation)

Rather than flattening data structures or enforcing strict hierarchies, Modern Treasury Ledgers supports a graph model called Account Categories, which allows flexible aggregation across any group of Accounts.

This enables features like:

- Parent-child Account hierarchies

- Account-based permissioning

- Flexible rollups for balance and transaction reporting
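A hypothetical sketch of graph-based rollups (an illustration of the idea, not the Ledgers API itself): each category lists members that may be Accounts or other categories, and an Account reachable along several paths is counted only once.

```python
from typing import Dict, List, Set

# Hypothetical graph: category ID -> member IDs (Accounts or other categories).
Graph = Dict[str, List[str]]


def accounts_in(graph: Graph, category: str) -> Set[str]:
    """Collect every Account reachable from `category`, each counted once."""
    seen: Set[str] = set()
    accounts: Set[str] = set()
    stack = [category]
    while stack:
        node = stack.pop()
        if node in seen:
            continue  # guards against cycles and shared sub-categories
        seen.add(node)
        if node in graph:
            stack.extend(graph[node])  # a category: explore its members
        else:
            accounts.add(node)         # not a category, so an Account
    return accounts


def category_balance(graph: Graph, balances: Dict[str, int], category: str) -> int:
    return sum(balances.get(a, 0) for a in accounts_in(graph, category))
```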

2. Flexible Transaction Search

From generating Account statements to querying recent activity in a marketplace or brokerage platform, search is a core user requirement. Common search patterns include:

- Date-range filters for statement generation

- Fuzzy matching (e.g., allowing card customers to search Transaction history for a restaurant name versus the precise statement description)

- Custom sorting

Solution: Ongoing Tuning

Performant search is a constantly moving target as the volume of Transactions increases and the types of queries change. Some scaling tips include:

- Optimizing indexes based on query shape

- Partitioning high-volume tables

- Implementing pagination via cursor-based (not offset-based) methods

- Monitoring search latency
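Cursor-based pagination can be sketched with Python's sqlite3 module and a hypothetical transactions table. Instead of OFFSET, each page seeks past the (effective_at, id) pair of the last row returned, which the composite index can serve directly; id breaks ties between rows with the same timestamp. ISO-8601 strings in one fixed format sort correctly as text.

```python
import sqlite3
from typing import List, Optional, Tuple

Cursor = Tuple[str, int]  # (effective_at, id) of the last row on the previous page

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transactions (id INTEGER PRIMARY KEY, effective_at TEXT NOT NULL)")
# Composite index matching the query shape: filter and sort on (effective_at, id).
conn.execute("CREATE INDEX idx_transactions_effective ON transactions (effective_at, id)")
conn.executemany(
    "INSERT INTO transactions (id, effective_at) VALUES (?, ?)",
    [(i, f"2025-08-{1 + i % 3:02d}T00:00:00Z") for i in range(1, 8)],
)


def fetch_page(
    conn: sqlite3.Connection, cursor: Optional[Cursor], limit: int = 3
) -> Tuple[List[Tuple[int, str]], Optional[Cursor]]:
    """Return one page of (id, effective_at) rows and the next cursor, or None."""
    if cursor is None:
        rows = conn.execute(
            "SELECT id, effective_at FROM transactions ORDER BY effective_at, id LIMIT ?",
            (limit,),
        ).fetchall()
    else:
        after_ts, after_id = cursor
        # Seek past the last row seen instead of skipping rows with OFFSET,
        # so a deep page costs about the same as the first one.
        rows = conn.execute(
            "SELECT id, effective_at FROM transactions"
            " WHERE effective_at > ? OR (effective_at = ? AND id > ?)"
            " ORDER BY effective_at, id LIMIT ?",
            (after_ts, after_ts, after_id, limit),
        ).fetchall()
    # A full page may be followed by more rows; a short page is the last one.
    next_cursor = (rows[-1][1], rows[-1][0]) if len(rows) == limit else None
    return rows, next_cursor
```

In PostgreSQL the same predicate is usually written as a row comparison, (effective_at, id) > ($1, $2).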

3. High-throughput Queueing for Writes

Leading fintechs easily surpass 5,000 QPS on their ledger infrastructure. At this scale, synchronous writes become a bottleneck.

Solution: Write-Ahead Queues

Implement an asynchronous Entry processing queue to buffer and batch ledger writes. Queues must be durable, idempotent, and designed to accept unlimited QPS for writes. Benefits of queueing include:

- Absorbing load spikes without dropping writes

- Decoupling application logic from DB write performance

- Supporting retries and monitoring independently.
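A minimal in-memory sketch of the buffering, batching, and deduplication behavior. The entry dict shape and the write_batch callback are hypothetical. A production queue must also be durable (surviving process restarts) and would expire old keys rather than keep them forever; this sketch does neither.

```python
from collections import deque
from typing import Callable, Deque, List, Set


class WriteQueue:
    """In-memory sketch of a write-ahead queue (a real one must be durable)."""

    def __init__(self, write_batch: Callable[[List[dict]], None], batch_size: int = 100):
        self._pending: Deque[dict] = deque()
        self._seen_keys: Set[str] = set()  # unbounded here; expire in practice
        self._write_batch = write_batch
        self._batch_size = batch_size

    def enqueue(self, entry: dict) -> bool:
        # Idempotent: a retried enqueue with the same key is dropped.
        key = entry["idempotency_key"]
        if key in self._seen_keys:
            return False
        self._seen_keys.add(key)
        self._pending.append(entry)
        return True

    def drain_once(self) -> int:
        # Hand up to batch_size queued items to the database in one write.
        n = min(self._batch_size, len(self._pending))
        batch = [self._pending.popleft() for _ in range(n)]
        if batch:
            try:
                self._write_batch(batch)
            except Exception:
                # Put the batch back at the front, in order, for a later retry.
                self._pending.extendleft(reversed(batch))
                raise
        return len(batch)
```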

4. Account Sharding Across Databases

At a global scale, the throughput and data storage requirements of a ledger can’t be accommodated by a single database. Some teams scale their ledger by migrating to an auto-sharding database like Google Spanner or CockroachDB. But more commonly, teams implement a manual sharding strategy, which presents challenges when the Accounts involved in a Transaction aren’t colocated on the same shard:

- Maintaining double-entry atomicity across shards

- Aggregating balances and Entries efficiently across distributed Accounts

- Coordinating background processes (e.g., balance drift checks)

Solution: Assurances Within Sharding Layers

If you build your own layer, focus on ensuring:

- Strong consistency guarantees across Accounts

- Cross-shard Transaction management

- Metrics collection and alerting per shard
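One building block of a manual sharding layer is deterministic routing from Account to shard, plus detecting when a Transaction’s Entries span shards and therefore can’t be committed in a single database transaction. A minimal sketch assuming hypothetical hash-modulo routing over NUM_SHARDS shards; a directory table or consistent hashing would make adding shards less disruptive.

```python
import hashlib
from typing import List, Set

NUM_SHARDS = 8  # hypothetical shard count


def shard_for(account_id: str, num_shards: int = NUM_SHARDS) -> int:
    # Use a stable hash: Python's built-in hash() is salted per process.
    digest = hashlib.sha256(account_id.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def shards_touched(entry_account_ids: List[str]) -> Set[int]:
    return {shard_for(a) for a in entry_account_ids}


def needs_cross_shard_commit(entry_account_ids: List[str]) -> bool:
    """True if a Transaction's Entries span shards, so a single-database
    transaction can't make it atomic and a cross-shard protocol is needed."""
    return len(shards_touched(entry_account_ids)) > 1
```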

Conclusion: Engineering for Growth

We hope this series offered a clear overview of the importance of solid ledgering infrastructure that:

- Preserves financial correctness

- Scales to meet product demand

- Supports internal systems and end-user use cases

We also can’t overstate the amount of investment it takes to get this right.

If you want a production-ready ledger that meets these requirements today and can scale with you, check out Modern Treasury Ledgers and reach out to us.


Matt McNierney serves as Engineering Manager for the Ledgers product at Modern Treasury, and is a frequent contributor to Modern Treasury’s technical community. Prior to this role, Matt was an Engineer at Square. Matt holds a B.A. in Computer Science from Dartmouth College.