
Improving Build Reliability by Reducing Integration Tests

Hear from two of our software engineers about how the team improved build reliability by reducing the number of integration tests.

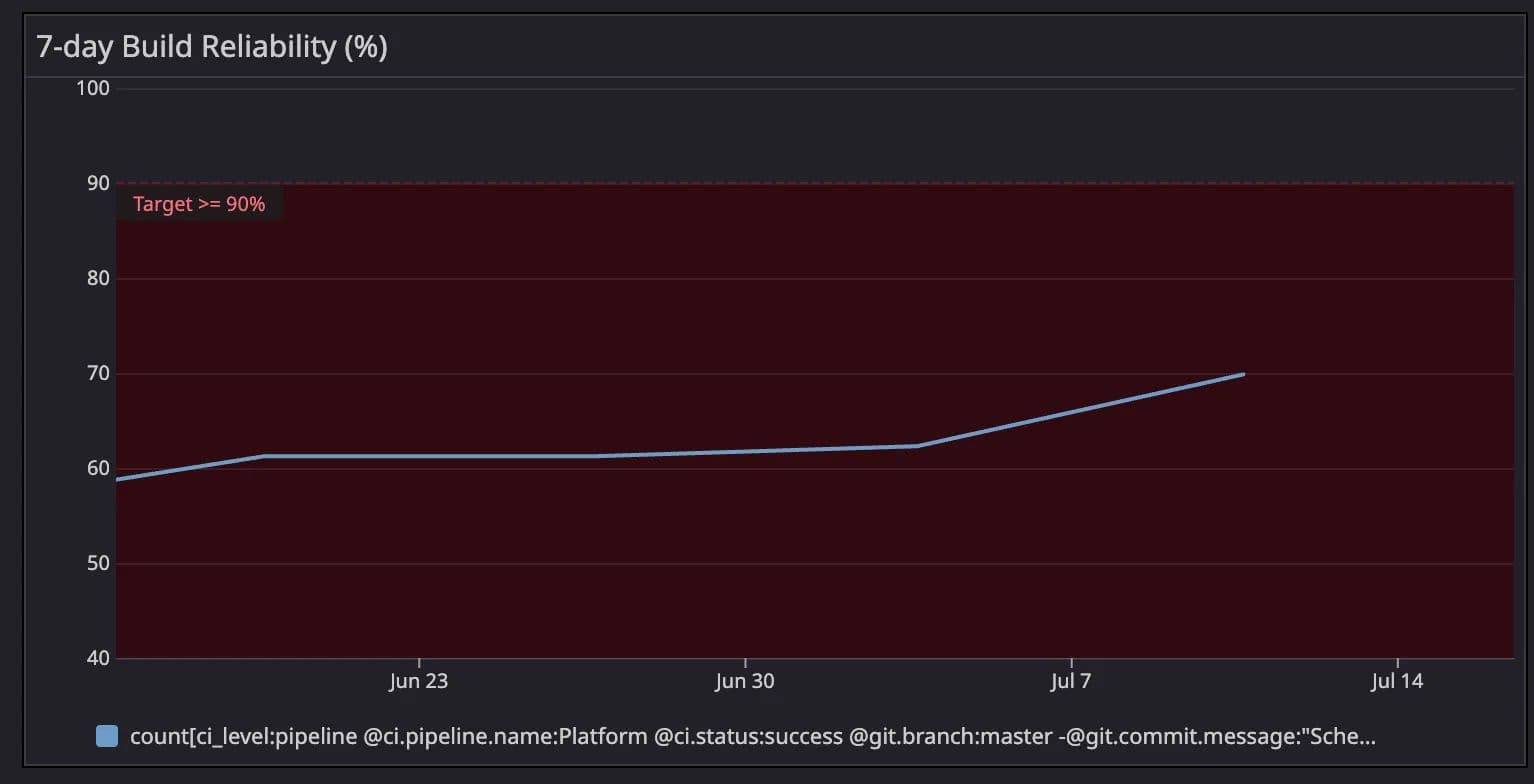

On the Modern Treasury engineering team, we had a build reliability problem. Build reliability, the percentage of builds that pass our full battery of tests without a single failure, had been below 60% for a while, and it was blocking engineers from shipping fast. Unreliable builds can be very painful for a high-velocity organization like ours that ships hundreds of commits per week. The culprit was flaky integration tests written with Cuprite, Capybara, and RSpec.

What We Tried First: Conservative Measures

Quarantining

Our first line of defense was identifying, isolating, and fixing flaky tests. To keep build reliability high while flakes were resolved asynchronously, we introduced a quarantining system built around Reliabot, a GitHub app that marked flaky tests with inline tags so that CI runs could skip them. Reliabot also assigned matching tickets to the owning teams so they could fix the flakes on their own schedule.

Putting Potential Fixes Through the Paces

We added a separate CI pipeline, the “flake crusher,” that let engineers run flaky feature tests repeatedly to put potential fixes through the paces. Before approving pull requests claiming to fix flakes, we would ask for a link to a “flake crusher” run.

However, we soon realized that re-running unreliable tests in isolation wasn’t an effective strategy for reproducing their flaky behavior, because flaky tests are often affected by the timing or performance of other tests in the suite.

Spotting Flakes Earlier

We also tried adding a “flake protection” CI step that mimicked the “flake crusher” CI pipeline. If a pull request modified a feature test, this CI step would run the full test file many times against the pull request. New flakes could thus be surfaced before they were merged.

These were all good ideas, but even with these measures, we found we could not quarantine and fix tests fast enough. Fixing the flakes was technically possible, but there were some big problems:

- Every developer in the org would need to learn how to precisely tune integration tests.

- Every time a page changed, the matching tests would have to undergo tuning and we would constantly be generating more work for ourselves. Furthermore, it wasn’t obvious which tests were “matching” a code change until they got tagged by our CI flake tracker.

Updating the Test Framework

We reasoned that a more scalable solution than having every engineer learn to tune integration tests would be to change our testing frameworks so that flakiness became impossible. These were the most effective ideas we tried:

- Adding waits before and after every click by writing “flake safe” spec helper methods. These methods would wrap the underlying Capybara methods and include waits. We’d also add custom linter rules to enforce that if there was a “flake safe” version of a method, it had to be used.

- If waiting timed out, auto-refreshing the page to give the test another chance.

- Building auto-retrying into spec helper methods, as with waits. For example, sometimes the element a test is looking for isn’t clickable yet, so the helper would wait and retry until the element was ready.

- Manually clearing network traffic between tests to prevent “pending connection” errors.
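The helpers described above wrapped Capybara methods in Ruby. As a language-neutral illustration only, here is a minimal TypeScript sketch of the wait-and-retry idea; the names withRetries and clickSubmitButton are hypothetical, and the simulated button stands in for an element that only becomes clickable on the third attempt.

```typescript
// Resolve after the given number of milliseconds.
function sleep(ms: number): Promise<void> {
  return new Promise((resolve) => setTimeout(() => resolve(), ms));
}

interface RetryOptions {
  attempts?: number; // total tries before giving up
  delayMs?: number; // pause between tries
}

// Hypothetical "flake safe" helper: retry an async action until it succeeds,
// waiting between attempts, and rethrow the last error if every try fails.
async function withRetries<T>(
  action: () => Promise<T>,
  { attempts = 5, delayMs = 100 }: RetryOptions = {},
): Promise<T> {
  let lastError: unknown = new Error("withRetries needs at least one attempt");
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      return await action();
    } catch (error) {
      lastError = error;
      if (attempt < attempts) await sleep(delayMs);
    }
  }
  throw lastError;
}

// Usage: a simulated button that only becomes clickable on the third attempt.
let calls = 0;
async function clickSubmitButton(): Promise<string> {
  calls += 1;
  if (calls < 3) throw new Error("element is not clickable yet");
  return "clicked";
}
```

Retrying like this hides timing problems rather than removing them: a test that needs three attempts is slower, and the underlying non-determinism is still there.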

After dedicating two engineers to these ideas for multiple quarters, we still didn’t have a satisfying framework. We saw some initial success, but as time went on, the return on investment decreased. One of the biggest problems with adding waits and retries is that sometimes tests just become slower while the non-determinism remains.

Changing Test Tech

We also explored other testing frameworks that had promise. We looked into:

- Migrating our Chrome driver from Selenium to Cuprite. This enabled us to wait for all network connections to finish loading before trying to do any of the expectation checking, so it helped a bit, but didn’t solve our reliability problem.

- Using Playwright. Playwright seemed promising, especially since it includes auto-waiting, but unfortunately the feature is only available when writing native Playwright tests and doesn’t work with the Playwright driver for Capybara. It would be a significant lift to migrate our existing Capybara tests to native Playwright for something that still had a lot of moving pieces.

- AI tools for integration tests. These tools might have potential, but we were concerned that a test produced with such a tool would be a black box to debug.

Ultimately, changing test tech wasn’t a feasible comprehensive solution. We weren’t getting the results we wanted, and we realized that more effort was unlikely to produce dramatically better results. The real problem was that a test with many interacting components is inherently prone to inconsistent or unreliable behavior.

In our test pyramid, the integration test layer, which should have been the smallest part, was actually quite large. Facing this core problem, we concluded that decreasing the number of integration tests was the only answer.
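A rough model shows why the size of this layer matters so much. Under the simplifying assumption (ours, not a measurement) that tests flake independently, a build passes only if every test passes, so its pass rate is the product of the per-test pass rates. The numbers in this sketch are made up for illustration.

```typescript
// Rough model (an assumption): tests flake independently, and a build
// passes only if every test in it passes.
function buildPassProbability(perTestPassRates: number[]): number {
  return perTestPassRates.reduce((product, rate) => product * rate, 1);
}

// Hypothetical suite: 500 integration tests, each passing 99.9% of the time.
const suite: number[] = Array.from({ length: 500 }, () => 0.999);
console.log(buildPassProbability(suite).toFixed(3)); // prints 0.606

// The same suite with 85% of those tests removed, assuming their
// replacements flake far less often and can be ignored in this model.
console.log(buildPassProbability(suite.slice(0, 75)).toFixed(3)); // prints 0.928
```

Even a tiny per-test flake rate compounds across hundreds of tests, which is why shrinking the number of integration tests moves build reliability so much.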

Jest

Enter Jest. We could use Jest to replace integration tests with frontend tests that provide similar code coverage. A frontend test can still flake, but because it tests a fundamentally smaller scope than an integration test does, flakes are meaningfully less likely. Moreover, Jest works well with asynchronous JavaScript code: our tests return promises, and Jest waits for those promises to resolve.

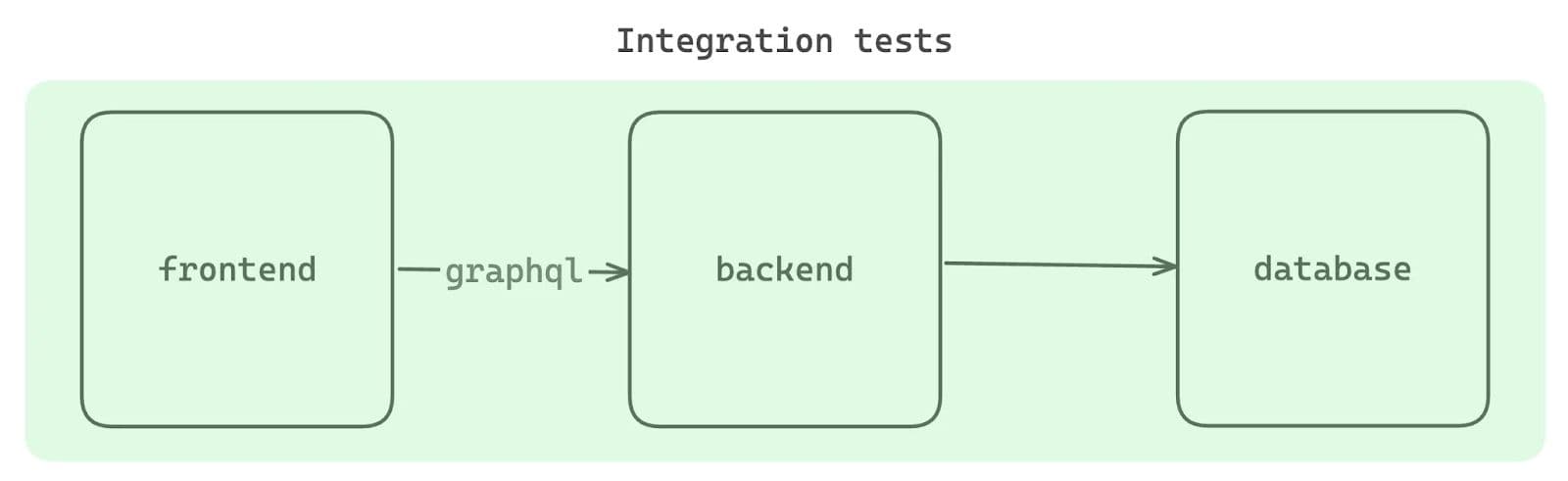

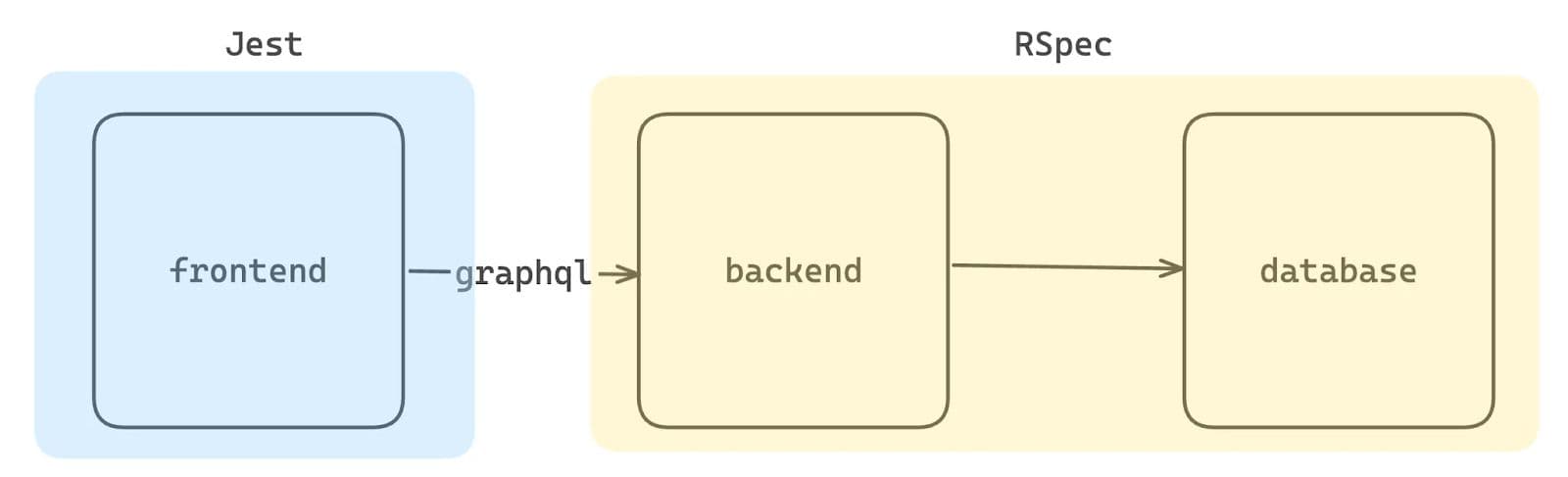

Would we lose any test coverage? The diagrams above compare our old and new testing patterns.

Previously, our only test coverage for frontend code was through integration tests. In many cases, it’s a simple improvement to convert the same coverage to a unit test in Jest. As you can see in the diagram though, there is a small coverage gap: our Jest tests would be unable to ensure that the frontend and backend are hooked up correctly.

For this coverage, we would still need a small number of integration tests, but we could cut their number drastically: around 85% of them could be rewritten as unit tests. So we settled on a policy of one integration test per page. We felt this gave us enough signal that the pieces of the UI were hooked up to the right GraphQL operations, and that everything beyond this should be tested comprehensively in unit tests.

Now, we had a couple of new problems, but luckily we were able to find some great solutions.

Problem 1: Adoption

We wanted to make adoption as frictionless as possible for developers.

Solution: We wrote helpers on top of Jest and React Testing Library with most of the same method names as we had with Capybara. So far, this has been a win: we haven’t seen these helpers get overly bloated with random conveniences; most of what we need to test is covered by the methods we already have.

We also wanted only one integration test per page.

Solution: We grouped integration tests by page and added a linter rule to prevent a single test from visiting multiple pages. This setup:

- Prevents new integration tests from being added to a page

- Enforces rewriting an un-quarantined integration test in Jest instead of fixing it (this ratchets down the number of existing integration tests per page)
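We enforced this with a linter rule. A related way to express the ratchet, sketched here as a hypothetical TypeScript CI check rather than our actual rule, is to compare per-page integration test counts against a committed baseline: counts may fall but never rise, and a page with no baseline gets at most one test. The names checkRatchet and PageCounts, and the page paths, are illustrative.

```typescript
// Hypothetical ratchet check: per-page integration test counts may only fall.
type PageCounts = Record<string, number>;

interface RatchetResult {
  violations: string[]; // pages that gained integration tests
  nextBaseline: PageCounts; // baseline tightened to any lower counts
}

function checkRatchet(baseline: PageCounts, current: PageCounts): RatchetResult {
  const violations: string[] = [];
  const nextBaseline: PageCounts = { ...baseline };
  for (const [page, count] of Object.entries(current)) {
    // A page with no baseline yet may have at most one integration test.
    const allowed = baseline[page] ?? 1;
    if (count > allowed) {
      violations.push(`${page}: ${count} integration tests, at most ${allowed} allowed`);
    } else {
      // Lock in reductions so the count can never creep back up.
      nextBaseline[page] = count;
    }
  }
  return { violations, nextBaseline };
}

// Usage: /accounts gained a test (rejected); /payments lost one (ratcheted down).
const result = checkRatchet(
  { "/payments": 3, "/accounts": 1 },
  { "/payments": 2, "/accounts": 2, "/counterparties": 1 },
);
console.log(result.violations);
```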

Problem 2: Generating Mock GraphQL Data in the Frontend

Actually, the most difficult part of writing these Jest tests was obtaining realistic mock data. With realistic data you’re able to properly fill out a frontend and exercise the various components.

Initial Implementation: Auto-Generated Mocks

Our GraphQL client, Apollo GraphQL, can automatically generate mocks based on a schema. Since our schema is strongly typed, we could use it to generate values that satisfied its type constraints.

This initially sounded promising, but we found that the type constraints of the schema were far more relaxed than what our frontend required. For example, a field like status needs to be one of a specific set of values, such as “completed” or “failed”; otherwise the frontend won’t know what to do with it.
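A minimal sketch of the mismatch, using hypothetical names: the schema only promises that status is a string, while the UI can only render a fixed set of values, so a schema-valid placeholder string still breaks the component.

```typescript
// What the schema guarantees (hypothetical shape): status is some string.
interface PaymentFromSchema {
  id: string;
  status: string;
}

// What the frontend actually knows how to render.
const KNOWN_STATUSES = ["completed", "failed"] as const;
type KnownStatus = (typeof KNOWN_STATUSES)[number];

function isKnownStatus(status: string): status is KnownStatus {
  return (KNOWN_STATUSES as readonly string[]).includes(status);
}

function statusLabel(payment: PaymentFromSchema): string {
  if (!isKnownStatus(payment.status)) {
    // An auto-generated but schema-valid string ends up here.
    throw new Error(`Unhandled status: ${payment.status}`);
  }
  return payment.status === "completed" ? "Completed" : "Failed";
}
```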

Final Solution: Default Mocks With Easy Overrides

We instead opted to manually define a set of default GraphQL mocks for each of our resources, but make it easy for developers to override them. For example, suppose we had a GraphQL schema with a single type, Payment.

With this type, we define a default mock that fills in reasonable values and provides the option to override it.
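As a hypothetical stand-in for the schema type, suppose Payment generates the TypeScript interface in this sketch (the fields are illustrative, not taken from our schema). A default mock that accepts overrides can then look like this:

```typescript
// Hypothetical TypeScript shape for the Payment type; illustrative fields only.
interface Payment {
  __typename: "Payment";
  id: string;
  amount: number;
  status: "completed" | "failed";
}

// Default mock with reasonable values; tests override only what they need.
function createPaymentMock(overrides: Partial<Payment> = {}): Payment {
  return {
    __typename: "Payment",
    id: "payment-1",
    amount: 1000,
    status: "completed",
    ...overrides,
  };
}

// Usage: a failed payment, with every other field left at its default.
const failedPayment = createPaymentMock({ status: "failed" });
```

Because overrides is typed as Partial<Payment>, a misspelled field or a value of the wrong type is a compile error, which keeps a mock like this in step with the generated types.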

We then use this mock when stubbing out any request for the payment resource.

When the component makes a GraphQL request for the payment resource, the mocked object is returned instead. This gives us an easy way to define a default mock that can be overridden. Everything is typed as well, which keeps the mocks in sync with the schema.

While this approach works for simple types, it doesn’t work for more complex types. Suppose we extended the schema to group payments by account, so that an account has many payments and each payment points back to its account.

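We must then update our mocks so that createPaymentMock builds its account with createAccountMock, and createAccountMock builds its payments with createPaymentMock.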

This introduces two problems:

- Since the mocks reference each other cyclically and are built eagerly, creating either one triggers an infinite loop.

- We are unable to pass an override to createAccountMock.

We solved these issues by returning objects that evaluate lazily, which allows cyclical references. To enable this, we stored any nested references as functions that can be evaluated later, and used a Proxy whose get trap evaluates any function it encounters.

Using this new setupMock function, we can convert our mocks so that nested references, such as a payment’s account, are stored as functions.

Very nice. With this mock setup, we can now pass overrides to nested mocks such as createAccountMock and follow cyclical references as deeply as a test needs.
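A minimal sketch of setupMock and the converted mocks, assuming hypothetical Payment and Account shapes. Types are kept deliberately loose here (MockFields is a plain Record<string, unknown>) to focus on the runtime behavior: the Proxy’s get trap calls any function-valued own property, so nested mocks are only built when a test reads them.

```typescript
// Fields may hold plain values or thunks (functions) for nested objects.
type MockFields = Record<string, unknown>;

// Wrap the fields in a Proxy whose get trap calls function-valued own
// properties, so nested objects are only built when they are read.
function setupMock<T>(fields: MockFields): T {
  const proxy = new Proxy(fields, {
    get(target, prop, receiver) {
      const value: unknown = Reflect.get(target, prop, receiver);
      const isOwn = Object.prototype.hasOwnProperty.call(target, prop);
      if (isOwn && typeof value === "function") return (value as () => unknown)();
      return value;
    },
  });
  return proxy as unknown as T;
}

// Hypothetical schema: an account has many payments; each payment points back.
interface Account {
  __typename: "Account";
  id: string;
  name: string;
  payments: Payment[];
}

interface Payment {
  __typename: "Payment";
  id: string;
  amount: number;
  status: "completed" | "failed";
  account: Account;
}

function createPaymentMock(overrides: MockFields = {}): Payment {
  return setupMock<Payment>({
    __typename: "Payment",
    id: "payment-1",
    amount: 1000,
    status: "completed",
    account: () => createAccountMock(), // only built when read
    ...overrides,
  });
}

function createAccountMock(overrides: MockFields = {}): Account {
  // Each payment's thunk points back at this same account, closing the cycle.
  const account: Account = setupMock<Account>({
    __typename: "Account",
    id: "account-1",
    name: "Operating Account",
    payments: () => [createPaymentMock({ account: () => account })],
    ...overrides,
  });
  return account;
}

// Usage: override a nested mock, then walk the cycle back to it.
const payment = createPaymentMock({
  status: "failed",
  account: () => createAccountMock({ name: "Payroll" }),
});
console.log(payment.account.payments[0].account.name); // prints Payroll
```

Each read calls the thunk again, so nested objects are rebuilt on every access; memoizing per property would be a possible refinement if object identity matters in a test. Restricting evaluation to own properties leaves inherited methods such as toString untouched.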

The details of the lazy evaluation are hidden to the caller, so this plays nicely with Apollo GraphQL.

Making the TypeScript Work

One nice feature of the initial implementation was that TypeScript ensured that we stayed in sync with the schema. Since we now store all object references as lazy functions, the generated types no longer fit out of the box. Using some TypeScript-fu, we can convert every object reference in a GraphQL type into a function that returns that object.

With this, the TypeScript compiler complains if a mock is created with the wrong type.
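A minimal sketch of such a type, with the hypothetical name LazyFields and illustrative Payment and Account shapes. Object-valued fields (including arrays and nullable objects) become thunks that return the original field type, while scalar fields keep their types. The @ts-expect-error lines mark the two mistakes the compiler now catches.

```typescript
// Turn every object-valued field into a thunk returning that field's type;
// scalar fields (strings, numbers, string unions) are left unchanged.
type LazyFields<T> = {
  [K in keyof T]: NonNullable<T[K]> extends object ? () => T[K] : T[K];
};

// Hypothetical schema types.
interface Account {
  __typename: "Account";
  id: string;
  name: string;
  payments: Payment[];
}

interface Payment {
  __typename: "Payment";
  id: string;
  amount: number;
  status: "completed" | "failed";
  account: Account;
}

// Compiles: the nested account is supplied as a function.
const paymentFields: LazyFields<Payment> = {
  __typename: "Payment",
  id: "payment-1",
  amount: 1000,
  status: "completed",
  account: () => ({
    __typename: "Account",
    id: "account-1",
    name: "Operating Account",
    payments: [],
  }),
};

// @ts-expect-error amount must be a number
const wrongAmount: LazyFields<Payment>["amount"] = "ten dollars";
// @ts-expect-error nested objects must be thunks, not plain objects
const eagerAccount: LazyFields<Payment>["account"] = paymentFields.account();
```

A helper like setupMock can then accept LazyFields<T> and return T, so callers keep seeing plain Payment and Account objects.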

We highly recommend checking out these TypeScript challenges to better understand working with TypeScript types.

We found that providing sane defaults for our resources, with the ability to override them, eased the burden on developers. These mocks gave us a frontend that loaded correctly in most cases; the data just needed to be tweaked to suit each test case. We also wrote a script to generate GraphQL queries with every field selected for each resource. Since our seed data fills in most fields, this worked pretty well for us, and we only needed a bit of manual editing to fill in the gaps. Moving forward, we will be writing most of our new frontend tests in Jest.
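A minimal sketch of the query-generation idea, assuming a hand-written, hypothetical FieldSpec description of each resource; a real script would more likely walk the schema’s introspection result. Nested object fields recurse into their own selection sets.

```typescript
// Hypothetical description of a resource: true marks a scalar field,
// a nested FieldSpec marks an object field.
type FieldSpec = { [field: string]: true | FieldSpec };

// Render a selection set, recursing into nested object fields.
function selectionSet(fields: FieldSpec, indent: string): string {
  return Object.entries(fields)
    .map(([name, spec]) =>
      spec === true
        ? `${indent}${name}`
        : `${indent}${name} {\n${selectionSet(spec, indent + "  ")}\n${indent}}`,
    )
    .join("\n");
}

// Build a query that selects every listed field of one resource by id.
function buildAllFieldsQuery(resource: string, fields: FieldSpec): string {
  const body = selectionSet(fields, "    ");
  return `query ${resource}AllFields($id: ID!) {\n  ${resource}(id: $id) {\n${body}\n  }\n}`;
}

const paymentFields: FieldSpec = {
  id: true,
  amount: true,
  status: true,
  account: { id: true, name: true },
};

console.log(buildAllFieldsQuery("payment", paymentFields));
```

With a real schema, cyclical references such as payment.account.payments mean a generator has to stop somewhere, for example at a fixed depth.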

Results

Is Jest completely free of flaky behavior? Not exactly—it’s always possible to write a race condition. But frontend unit tests have far fewer components and are way easier to reason about.

Any form of randomness or race condition can cause flaky behavior. In RSpec unit tests and Jest tests, the cause is usually in the test itself. With integration tests, most of the time it’s an issue with the framework or the scope of what’s being tested.

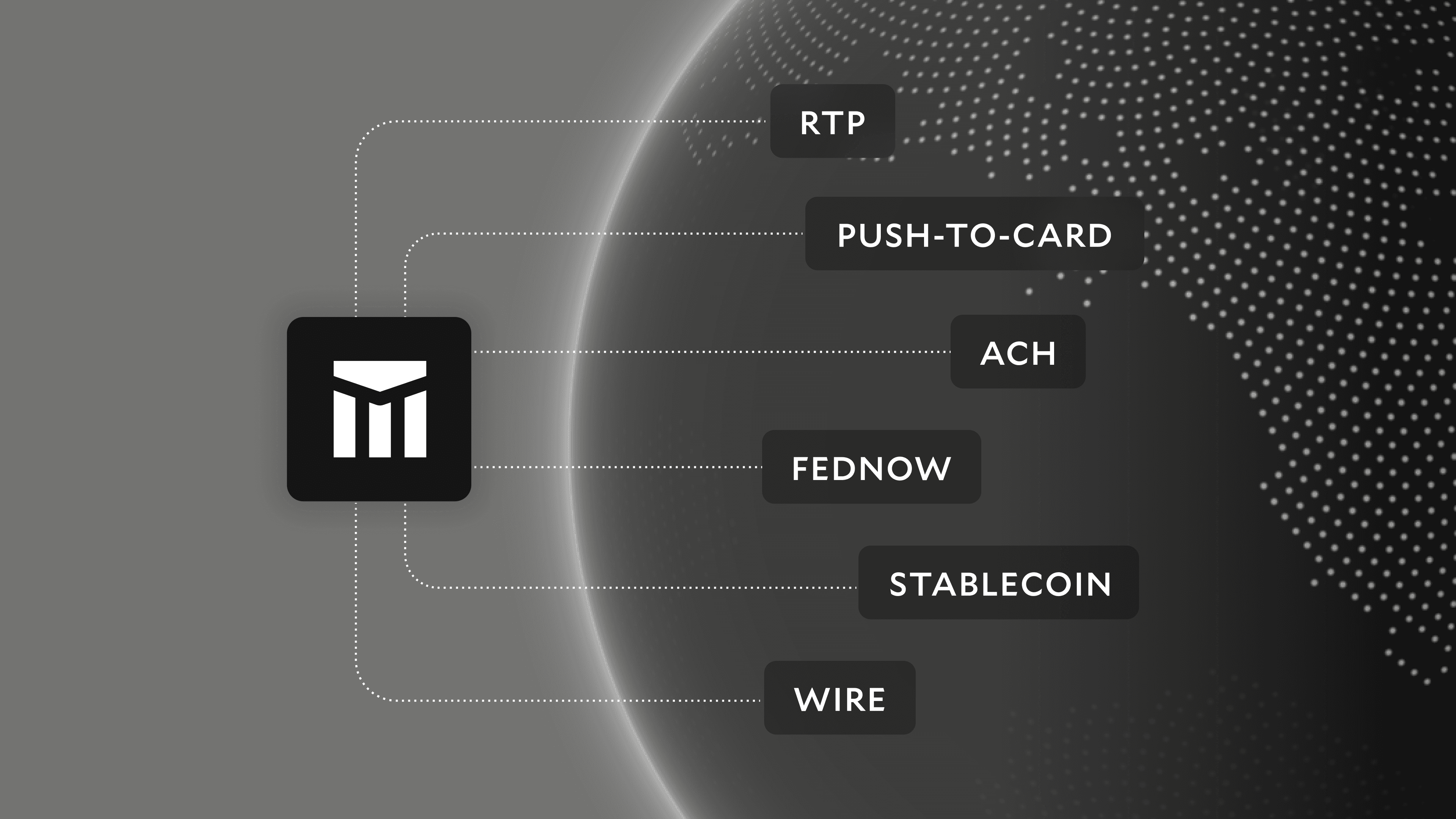

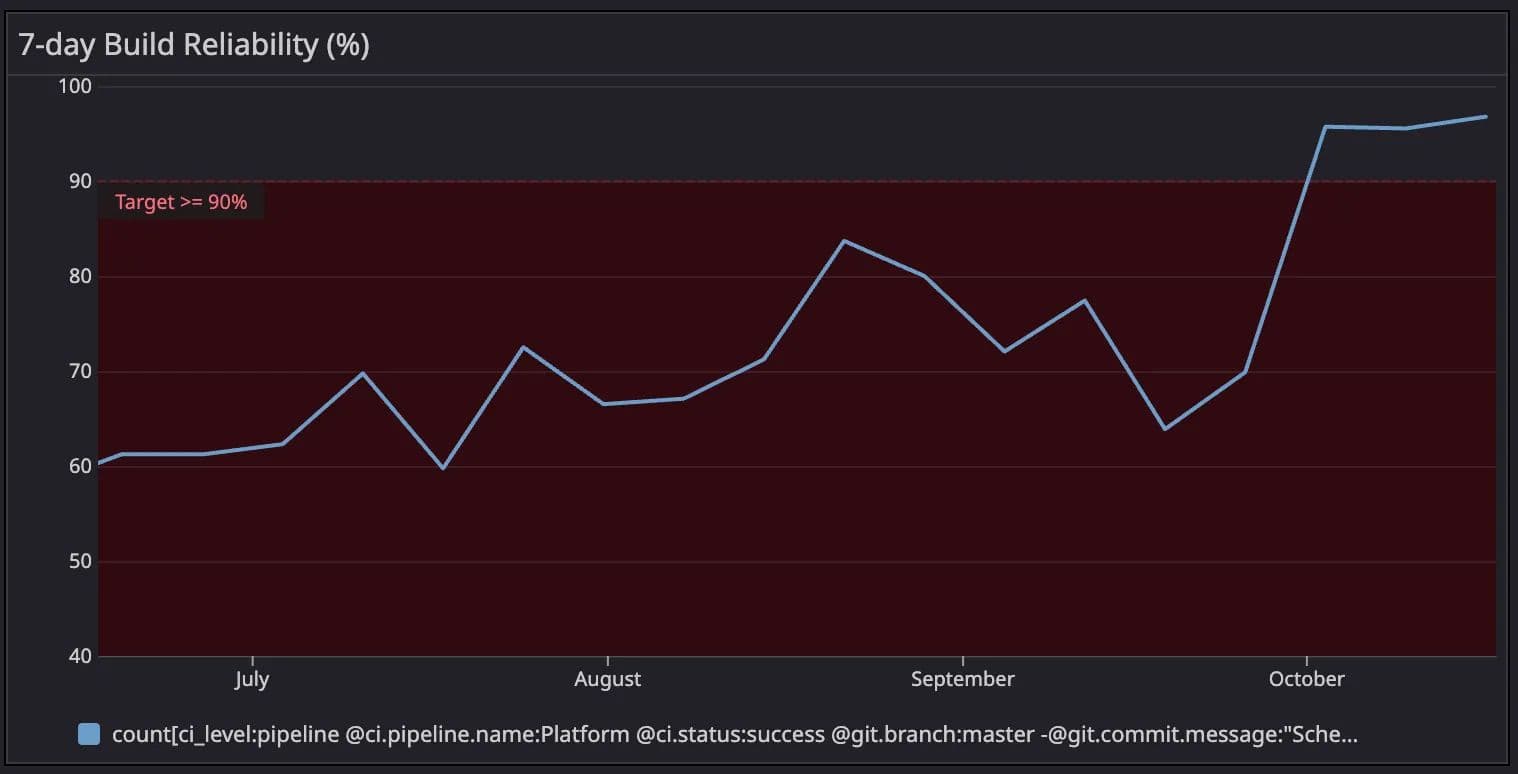

This is what our build reliability looked like over the period that we made these changes.

If you’re curious to learn more about what we do here, Modern Treasury’s engineering team is hiring, and we would love to hear from you!

A flaky test is a test that fails non-deterministically.

Our stack is TypeScript, React, GraphQL, Ruby on Rails, and PostgreSQL.

CI stands for Continuous Integration.