Behind the Scenes: How We Built Ledgers for High Throughput

A high-throughput ledger database is essential infrastructure for companies moving money at scale. Learn how Modern Treasury built our Ledgers product for enterprises that track payments at volume and velocity.

Why Fast Ledgering Matters

Money movement requires precision, whether it’s moving money between bank accounts or just changing ownership of funds in a database. Even a one-cent discrepancy can send accountants into a mad scramble to figure out what went wrong, to say nothing of the real-world stories we’ve heard of companies at scale temporarily losing track of millions of dollars.

Want to sleep soundly at night, knowing your money is all accounted for? Be disciplined about how you track money movement at the source: your applications. To do that, you need a ledger that is performant enough to be in the critical path of your money-moving code.

As we’ve built Modern Treasury Ledgers over the past few years, we’ve learned how hard this is. Double-entry ledgering is difficult to scale in the context of financial products because:

- All money movement must be immutably recorded, so that history can be replayed.

- Money movement does not tolerate data loss of any kind. In some consumer apps you can report that a social media post has “about 1M views.” You can’t report that a Ledger Account has “about $1M.”

- Clients need synchronous responses in many use cases, like debit card authorizations or P2P money transactions.

We’ve invested a lot in building a ledger that can still be fast despite those constraints. Below I’ll tell you how we do it from an engineering perspective.

Performance Testing

We perform peak performance testing at least quarterly. Key to our strategy is matching real-world use cases. A load test built around ideal scenarios, chosen with knowledge of how the system is implemented, is useless. We constantly look at traffic patterns from our existing customers and dive deep into the requirements of our prospective customers to inform our load test setups.

Our latest performance test was based on the requirements of a high-throughput investing platform. Every millisecond spent in the ledger means risk in this use case: from the instant a customer is quoted a price to when the trade is completed, the platform is on the hook for price fluctuations.

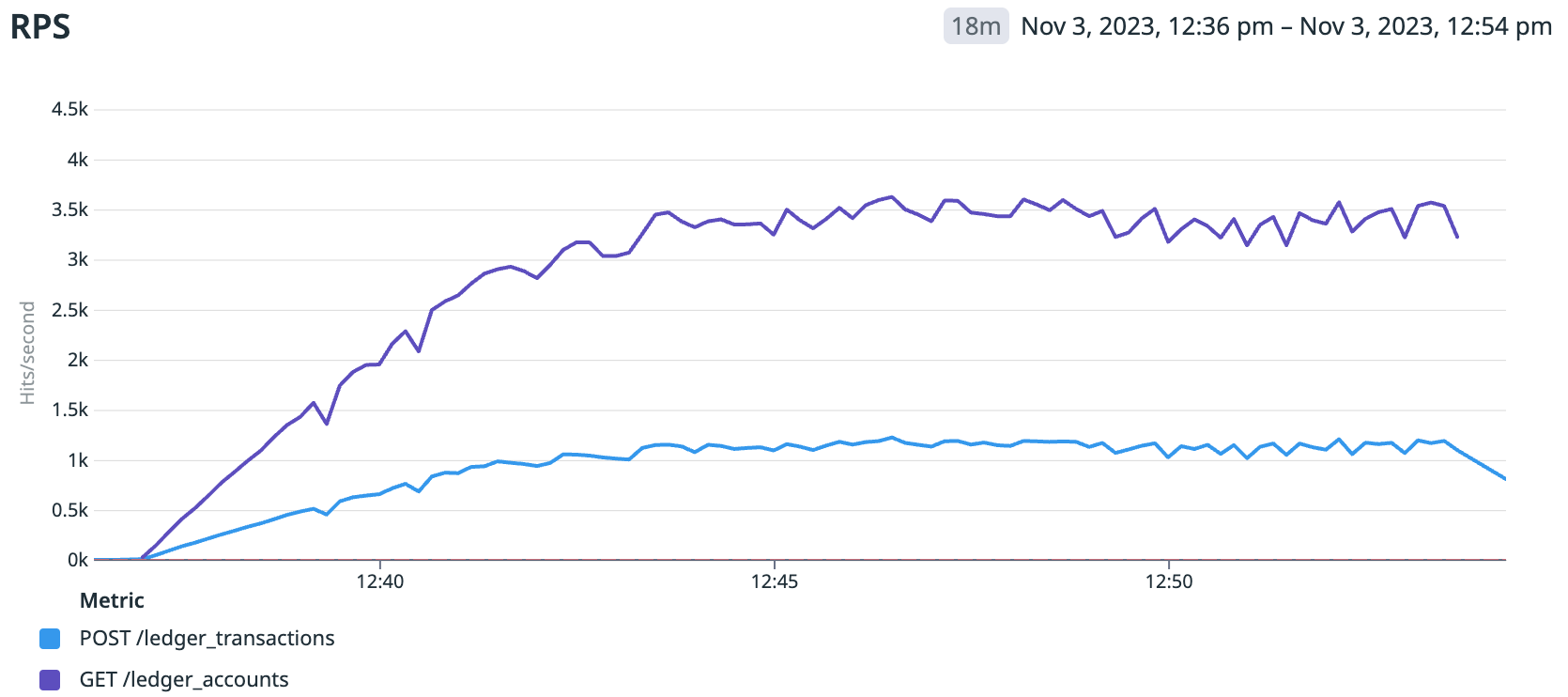

Our goal was to demonstrate we could write at least 1,200 transactions (4,800 entries) per second (POST /ledger_transactions) with 3,600 concurrent reads per second (GET /ledger_accounts). We also needed to maintain low latency and enforce our .

This does not represent a ceiling on Ledgers performance; our deployments are able to scale further on a customer-by-customer basis. For comparison, here are some reported volumes from large platforms:

- Robinhood reported 2.2m daily average trades, or 25 per second.

- Charles Schwab reported 5.2m daily average trades, or 60 per second.

- Shopify reported purchases from 61m consumers over Black Friday weekend, or 177 per second.

- Stripe reported 300m transactions over Black Friday weekend, with a peak of 93,304 transactions per minute. That’s 868 transactions per second sustained, and 1,555 transactions per second peak.

- Amazon reported 100K items bought per minute over Black Friday ‘22, or 1,666 per second. In ‘23, Amazon reported 375m orders on Black Friday over two days, which is 2,170 transactions per second.
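The per-second figures above are simple unit conversions. As a rough check, the sketch below reproduces them. It assumes that "Black Friday weekend" means four full days (Friday through Monday), which is not stated in the reports but matches the quoted rates; the function and variable names are illustrative only.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def per_second(total_events: float, days: float) -> float:
    """Average events per second when total_events are spread evenly over `days`."""
    return total_events / (days * SECONDS_PER_DAY)


# Daily averages cover one day each.
robinhood = per_second(2.2e6, days=1)
schwab = per_second(5.2e6, days=1)

# Assumption: "Black Friday weekend" spans four days (Friday through Monday).
shopify = per_second(61e6, days=4)
stripe_sustained = per_second(300e6, days=4)

# Per-minute peaks convert by dividing by 60.
stripe_peak = 93_304 / 60
amazon_2022 = 100_000 / 60

# Amazon's 2023 figure covers two days.
amazon_2023 = per_second(375e6, days=2)

for name, rate in [
    ("Robinhood", robinhood),
    ("Charles Schwab", schwab),
    ("Shopify", shopify),
    ("Stripe (sustained)", stripe_sustained),
    ("Stripe (peak)", stripe_peak),
    ("Amazon 2022", amazon_2022),
    ("Amazon 2023", amazon_2023),
]:
    print(f"{name}: {rate:,.0f} per second")
```

These are averages over the stated windows, so real traffic within each window will have bursts well above them.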

Here’s how we performed.

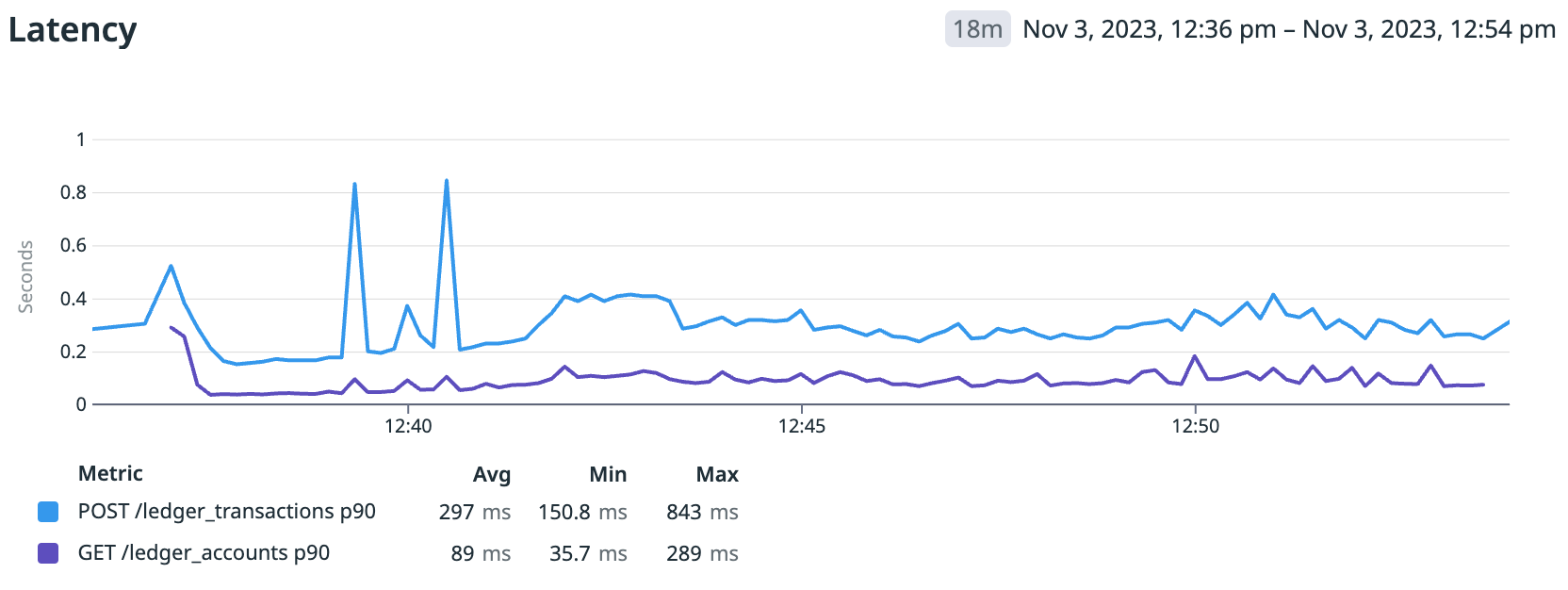

Latency was generally constant (aside from brief spikes in the beginning caused by ECS autoscaling kicking in), with 297ms average p90 latency for creating Ledger Transactions, and 89ms average p90 latency for reading Ledger Account balances.

We were happy with these results. We could easily handle the volumes quoted from Robinhood, Charles Schwab, and Shopify. We could handle Stripe’s average load and likely bursts of their Black Friday peak loads as well. We’re also within striking distance of Amazon’s peak loads. Customers are always bringing us new use cases, and we’ll continue investing in performance to meet their needs.

Achieving this kind of scale required a lot of investment in our Ledgers API. Next, I’ll walk through some of the key ideas that got us here.

Balance Caching

As we explored in our How to Scale a Ledger series, the balance of an Account must always equal the sum of its Entries. The simplest implementation is O(# of Entries) — the ledger sums up the Entries whenever the balance of an Account is requested. That’s fine with hundreds or thousands of Entries per Account, but it’s too slow once we have tens of thousands or hundreds of thousands of Entries. It’s also too slow if we’re asking the ledger to compute the balance of many Accounts at the same time.

At the core of Modern Treasury’s Ledger are caches of Account balances, so that balance reads are an O(1) operation. We maintain three caches:

- Total balance cache: This cache stores the running sum of all Entries written to an Account.

- Effective time balance cache: This cache stores historical balances, which are used when an Account is fetched with an effective_at timestamp. This cache supports backdating Entries, so that balance history can match an external source of truth. Imagine a customer initiates a loan payment right at midnight when it’s due, but your backend system doesn’t get to it for a few seconds. You likely do not want to mark the customer as past due when it was your system that was delayed, not the customer’s payment.

- Resulting balance cache: We store the total Account balance as it was when each Entry is written to it as resulting_ledger_account_balances. This cache can power Account statements, such as for a credit card or bank account, that typically show resulting balances after each Transaction. Also, this cache is used when clients request the state of an Account at a particular lock_version.
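To make the three caches concrete, here is a minimal in-memory sketch. It is not Modern Treasury's implementation: the class and method names are hypothetical, amounts are signed integers, and timestamps are plain integers. The effective-time lookup re-sums a sorted list for brevity, whereas a real cache would store precomputed balances.

```python
from bisect import bisect_right, insort
from dataclasses import dataclass, field


@dataclass
class CachedAccount:
    """In-memory stand-in for one Account's balance cache rows (hypothetical)."""

    total: int = 0                                  # total balance cache
    lock_version: int = 0                           # incremented on every write
    resulting: dict = field(default_factory=dict)   # lock_version -> balance
    by_time: list = field(default_factory=list)     # sorted (effective_at, amount)

    def write_entry(self, amount: int, effective_at: int) -> int:
        """Apply a signed amount and return the resulting balance for this Entry."""
        self.total += amount
        self.lock_version += 1
        self.resulting[self.lock_version] = self.total
        # insort keeps the list ordered, so backdated Entries slot into place.
        insort(self.by_time, (effective_at, amount))
        return self.total

    def balance(self) -> int:
        """O(1) read of the running sum."""
        return self.total

    def balance_at_version(self, lock_version: int) -> int:
        """Balance as it was right after the write with this lock_version."""
        return self.resulting[lock_version]

    def balance_at(self, effective_at: int) -> int:
        """Sum of Entries effective at or before the timestamp."""
        cutoff = bisect_right(self.by_time, (effective_at, float("inf")))
        return sum(amount for _, amount in self.by_time[:cutoff])


account = CachedAccount()
account.write_entry(1000, effective_at=10)
account.write_entry(-300, effective_at=20)
account.write_entry(500, effective_at=5)   # backdated Entry
print(account.balance(), account.balance_at_version(2), account.balance_at(10))
```

Note how the backdated Entry changes the effective-time balance at timestamp 10 without changing the resulting balance recorded at lock_version 2. That separation is what lets one Account serve both statement-style history and backdated corrections.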

These caches not only power low latency balance reads, but also enable some advanced features such as:

- Balance locking: Entries can specify conditions on the resulting Account balance, which allows clients to require that an Entry only go through if there is sufficient balance on an Account. The balance cache enables us to use Postgres row-level locking to guarantee serial writes to Accounts when balance locks are present.

- Balance filtering: We use the balance caches to quickly filter Accounts that meet specified balance criteria, for example all Accounts that had more than $0 at a certain effective_at time.

- Ledger Account Categories: Categories are graphs of Accounts that can report the total balance of all contained Accounts. Because each Account’s balance is cached, it’s performant to report aggregate sums even in large Categories. We have additional caching for Categories that precomputes common graph operations, such as recursively fetching all Accounts that exist within the Category graph.
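Balance locking is essentially a check-then-write that must not interleave with other writers. The sketch below illustrates the idea with a per-account `threading.Lock` standing in for a Postgres row-level lock. The class, method, and exception names are hypothetical, and the real system enforces this in the database rather than in application memory.

```python
import threading


class InsufficientBalance(Exception):
    """Raised when a debit would push the balance below the allowed minimum."""


class LockedAccount:
    """One Account's cached balance, guarded by a lock (stand-in for a row lock)."""

    def __init__(self, balance: int = 0) -> None:
        self.balance = balance
        self._lock = threading.Lock()

    def debit_if_sufficient(self, amount: int, min_resulting: int = 0) -> int:
        """Debit only if the resulting balance stays at or above min_resulting."""
        with self._lock:  # writers are serialized, so check-then-write is atomic
            resulting = self.balance - amount
            if resulting < min_resulting:
                raise InsufficientBalance(
                    f"resulting balance {resulting} is below {min_resulting}"
                )
            self.balance = resulting
            return resulting


# Ten concurrent debits of 300 against a balance of 1,000.
account = LockedAccount(1000)
outcomes = []


def attempt() -> None:
    try:
        account.debit_if_sufficient(300)
        outcomes.append(True)
    except InsufficientBalance:
        outcomes.append(False)


workers = [threading.Thread(target=attempt) for _ in range(10)]
for worker in workers:
    worker.start()
for worker in workers:
    worker.join()
print(outcomes.count(True), account.balance)
```

Because every writer holds the lock while it checks and updates, only as many debits succeed as the balance allows, regardless of how the threads are scheduled.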

Double-Entry at Scale

After getting consistently fast reads by implementing caching, we turned our attention to writes. With real-world use cases, we discovered a “hot account” problem, an issue inherent to double-entry accounting at scale. In most double-entry ledgers, there is a common Account that is present on most Transactions. Consider a debit card ledger. The simplest debit card authorization transaction with double-entry Accounting would have two Entries:

| Field | Value |
|---|---|
| id | card_entry_1 |
| account_id | card_account_id |
| status | pending |
| direction | debit |
| amount | 1000 |

| Field | Value |
|---|---|
| id | bank_entry_1 |
| account_id | bank_account_id |
| status | pending |
| direction | credit |
| amount | 1000 |

card_entry_1 must be processed synchronously: the caller needs to immediately respond to the card network. Additionally, Entries must be written one-at-a-time to the card Account, to ensure that no Entries are allowed that would cause the Account to overdraft.

bank_entry_1 represents the bank settlement Account. Every debit card authorization Transaction across the program will contain an Entry for this Account.

The bank settlement Account is a “hot account,” because it’s present on most Transactions written to the Ledger. We’ve found that at high load, contention for the single balance cache row causes Transaction write latency to spike dramatically.
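A small simulation shows why the settlement Account becomes hot. The sketch below builds the two-Entry authorization from the example above many times over and counts writes per Account. The `Entry` class, the helper names, and the program size (1,000 authorizations across 100 cardholders) are illustrative assumptions.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    id: str
    account_id: str
    status: str
    direction: str  # "debit" or "credit"
    amount: int


def is_balanced(entries) -> bool:
    """Double-entry invariant: total debits equal total credits."""
    debits = sum(e.amount for e in entries if e.direction == "debit")
    credits = sum(e.amount for e in entries if e.direction == "credit")
    return debits == credits


def authorization(n: int, card_account_id: str, amount: int) -> list:
    """Build the two Entries of one debit card authorization."""
    return [
        Entry(f"card_entry_{n}", card_account_id, "pending", "debit", amount),
        Entry(f"bank_entry_{n}", "bank_account_id", "pending", "credit", amount),
    ]


# Hypothetical program: 1,000 authorizations spread over 100 cardholders.
transactions = [
    authorization(n, f"card_account_{n % 100}", 1000) for n in range(1000)
]
writes_per_account = Counter(e.account_id for txn in transactions for e in txn)
hottest_account, hottest_writes = writes_per_account.most_common(1)[0]
print(hottest_account, hottest_writes, writes_per_account["card_account_0"])
```

Each card Account sees a small share of the writes, while the settlement Account sees every one of them, so any per-Account serialization concentrates on that single row.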

To address this problem, we designed and implemented a hybrid-async API. This approach enables clients to specify on each Entry whether it must be processed serially or if it can be asynchronously batch processed. The resulting architecture looks like this:

Sync / Async Router: A lightweight component that determines whether an Entry can be processed asynchronously. If the Entry has a balance lock or passes the show_resulting_ledger_account_balances flag, then the client needs an immediate response and the Entry must acquire an exclusive lock on the corresponding Account. Otherwise, the Entry is sent to the Async Entry Queue.
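The routing rule described here can be sketched in a few lines. The `EntryRequest` type, its `has_balance_lock` field, and the `Route` enum are hypothetical names for illustration; only the `show_resulting_ledger_account_balances` flag comes from the description above.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    SYNC = "sync"    # acquire an exclusive lock on the Account and respond now
    ASYNC = "async"  # enqueue for batch processing


@dataclass(frozen=True)
class EntryRequest:
    account_id: str
    direction: str
    amount: int
    has_balance_lock: bool = False  # hypothetical field name
    show_resulting_ledger_account_balances: bool = False


def route(entry: EntryRequest) -> Route:
    """An Entry is synchronous only if the client needs an immediate answer."""
    if entry.has_balance_lock or entry.show_resulting_ledger_account_balances:
        return Route.SYNC
    return Route.ASYNC


card_entry = EntryRequest("card_account_id", "debit", 1000, has_balance_lock=True)
bank_entry = EntryRequest("bank_account_id", "credit", 1000)
print(route(card_entry), route(bank_entry))
```

In the debit card example, the card Entry takes the synchronous path because it carries a balance lock, while the settlement Entry can be queued.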

Balance Cache and effective_at Cache: All Entries eventually make it to the balance caches, which live in a Postgres database. Our Get Ledger Account endpoint reads directly from this balance cache, ensuring read-after-write consistency when it’s needed.

Async Entry Queue: This queue, implemented with AWS SQS, stores all Entries that can be processed asynchronously. A scheduled job fetches batches from the queue to be processed. We target processing all Entries within 60s, and in practice, our p90 time to process is 1s. Depending on the throughput of async Entries, we tune how long the job waits to gather a batch. Wait too long, and we risk delayed Entry processing. Wait too briefly, and we’re writing to the balance cache too frequently, risking database contention.
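One way batching can relieve contention is by collapsing many Entries for the same Account into a single balance update. The sketch below shows that idea in memory; it is an illustration under assumptions, not the actual job. The function names are hypothetical, and the sign convention (credits increase the balance, debits decrease it) is assumed for simplicity.

```python
from collections import defaultdict


def net_deltas(batch) -> dict:
    """Collapse (account_id, direction, amount) tuples into one signed delta per Account."""
    deltas = defaultdict(int)
    for account_id, direction, amount in batch:
        # Assumed convention: credits add to the balance, debits subtract.
        deltas[account_id] += amount if direction == "credit" else -amount
    return dict(deltas)


def apply_batch(balances: dict, batch) -> int:
    """Apply a batch to the cached balances; return how many cache rows were written."""
    deltas = net_deltas(batch)
    for account_id, delta in deltas.items():
        balances[account_id] = balances.get(account_id, 0) + delta
    return len(deltas)


balances = {"bank_account_id": 0}
batch = [
    ("bank_account_id", "credit", 1000),
    ("bank_account_id", "credit", 1000),
    ("bank_account_id", "credit", 1000),
    ("bank_account_id", "debit", 500),
]
rows_written = apply_batch(balances, batch)
print(rows_written, balances["bank_account_id"])
```

Four Entries against the hot Account become one cache write. The longer the job waits, the more Entries each write absorbs, which is the latency versus contention trade-off described above.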

Read Database: This database, a read replica, serves our List Endpoints. Our read replicas are auto-scaling.

All of these components come together to enable both read-after-write consistency on the accounts where it matters, and super fast batched writes on hot accounts. Our API allows clients to choose what kind of performance they need on each Entry they write.

Scaling for Each Customer

1,200 transactions per second tends to suffice for most use cases, but if that were the upper limit for all our customers combined, we’d only be able to support a few high-throughput customers. To enable us to scale independent of the number of customers we have, we’ve migrated to a cells-based architecture.

At a high level, a cell is a full deployment of Modern Treasury. A lightweight routing layer takes in traffic from our customers and routes each request to the correct cell.

We theoretically can have infinite cells, and we deploy to them progressively to limit the blast radius of any issues. Some cells may contain many customers, some may have just one. We’ve also invested in tooling to move customers between cells, should their reliability or throughput requirements change.
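Conceptually, the routing layer is a lookup from customer to cell, and moving a customer is a change to that mapping. The sketch below is a deliberately simplified illustration: `CellRouter`, its methods, and the cell names are hypothetical, and it ignores the data migration that a real move between cells involves.

```python
from dataclasses import dataclass, field


class UnknownCustomer(LookupError):
    """Raised when a request arrives for a customer with no assigned cell."""


@dataclass
class CellRouter:
    assignments: dict = field(default_factory=dict)  # customer_id -> cell name

    def assign(self, customer_id: str, cell: str) -> None:
        """Place a customer in a cell, or move them if already placed."""
        self.assignments[customer_id] = cell

    def cell_for(self, customer_id: str) -> str:
        """Return the cell that should serve this customer's traffic."""
        try:
            return self.assignments[customer_id]
        except KeyError:
            raise UnknownCustomer(customer_id) from None

    def customers_in(self, cell: str) -> list:
        """List the customers currently routed to a cell."""
        return sorted(c for c, assigned in self.assignments.items() if assigned == cell)


router = CellRouter()
router.assign("customer_a", "shared-cell-1")
router.assign("customer_b", "shared-cell-1")
router.assign("customer_c", "dedicated-cell-1")

# A customer whose throughput requirements grow is moved to its own cell.
router.assign("customer_b", "dedicated-cell-2")
print(router.cell_for("customer_b"), router.customers_in("shared-cell-1"))
```

Keeping the router this thin is what allows some cells to hold many customers and others just one, without the rest of the platform knowing the difference.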

Cells let us tune parameters to specifically match the needs of certain customers. Some parameters we’re able to tweak include:

- Database size: We can choose different sizes of AWS components per cell to match customer requirements.

- Ledger Entry queue processing: We can modify Entry processing batch sizes to meet the throughput requirements of different customers.

- Database connection multiplexing: At high throughputs, we want to limit the number of connections to our database to preserve memory. We achieve this through multiplexing, and can configure this per cell.

- Auto-vacuum thresholds: Depending on the volume of writes to a given table, we tune the threshold at which Postgres does auto-vacuums, which among other things helps query plans be as efficient as possible.

- Custom indexes: Occasionally, customers have specific needs for search queries on our List endpoints. We can design custom indexes per customer to make these queries fast, without imposing latency on other customers.
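Per-cell tuning can be pictured as a small configuration record for each cell. The sketch below is hypothetical in its structure and values: `CellConfig` and its `entry_batch_size` and `max_db_connections` fields are illustrative names. The two autovacuum fields mirror the Postgres settings of the same names, with Postgres defaults of 50 and 0.2, and the trigger formula follows the Postgres documentation: a table is vacuumed once its dead rows exceed the threshold plus the scale factor times the table's row count.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CellConfig:
    name: str
    entry_batch_size: int          # hypothetical: async Entries per batch
    max_db_connections: int        # hypothetical: cap after multiplexing
    autovacuum_vacuum_threshold: int = 50        # Postgres default
    autovacuum_vacuum_scale_factor: float = 0.2  # Postgres default

    def vacuum_trigger(self, table_rows: int) -> float:
        """Dead rows needed before Postgres autovacuums a table of this size."""
        return (
            self.autovacuum_vacuum_threshold
            + self.autovacuum_vacuum_scale_factor * table_rows
        )


# Illustrative values only.
shared = CellConfig("shared-cell-1", entry_batch_size=100, max_db_connections=200)
dedicated = CellConfig(
    "dedicated-cell-1",
    entry_batch_size=1000,
    max_db_connections=50,
    autovacuum_vacuum_scale_factor=0.01,  # vacuum write-heavy tables sooner
)

rows = 1_000_000
print(shared.vacuum_trigger(rows), dedicated.vacuum_trigger(rows))
```

With the default scale factor, a million-row table accumulates roughly 200,000 dead rows before a vacuum. Lowering the scale factor for a write-heavy cell makes vacuums smaller and more frequent.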

Cells give us the best of both worlds: for the most part, Modern Treasury engineers can build one platform, while still customizing deployments for specific customers as needed.

What’s Next

We’re not done on our quest to build the most feature-rich and performant application ledger. Some projects we have on the horizon:

- Sharding: Cells allow us to shard our data by customer. In anticipation of a single customer’s data or throughput requirements not fitting a single cell, we’re developing a sharding strategy for our largest tables.

- Faster search: Our List endpoints are powered by indexes on read replicas. This has worked well so far, but we need to develop specific indexes for nearly every new query, and every new index imposes latency on our writes. We’re working on ways to make use of search-optimized databases to better suit arbitrary queries.

- Cold storage: Over time, individual transaction tables become too large, making it difficult to perform migrations and affecting query latency. We’ll devise a method to safely move old data to cold storage, making queries for recent data fast while still retaining an immutable log of all history.

Ledgers is designed to help engineering teams track money at any scale and velocity. If you need a performant database in your payments stack, you can learn more about Ledgers here. To learn how we work with enterprises, get in touch with us here.